Writing Interpreters in Rust: a Guide

Welcome!

In this book we will walk through the basics of interpreted language implementation in Rust with a focus on the challenges that are specific to using Rust.

At a glance, these are:

- A custom allocator for use in an interpreter

- A safe-Rust wrapper over allocation

- A compiler and VM that interact with the above two layers

The goal of this book is not to cover a full featured language but rather to provide a solid foundation on which you can build further features. Along the way we'll implement as much as possible in terms of our own memory management abstractions rather than using Rust std collections.

Level of difficulty

Bob Nystrom's Crafting Interpreters is recommended introductory reading to this book for beginners to the topic. Bob has produced a high quality, accessible work and while there is considerable overlap, in some ways this book builds on Bob's work with some additional complexity, optimizations and discussions of Rust's safe vs unsafe.

We hope you find this book to be informative!

Further reading and other projects to study:

All the links below are acknowledged as inspiration or prior art.

Interpreters

- Bob Nystrom's Crafting Interpreters

- The Inko programming language

- kyren - piccolo and gc-arena

Memory management

- Richard Jones, Anthony Hosking, Elliot Moss - The Garbage Collection Handbook

- Stephen M. Blackburn & Kathryn S. McKinley - Immix: A Mark-Region Garbage Collector with Space Efficiency, Fast Collection, and Mutator Performance

- Felix S Klock II - GC and Rust Part 0: Garbage Collection Background

- Felix S Klock II - GC and Rust Part 1: Specifying the Problem

- Felix S Klock II - GC and Rust Part 2: The Roots of the Problem

Allocators

This section gives an overview and implementation detail of allocating blocks of memory.

What this is not: a custom allocator to replace the global Rust allocator

Alignment

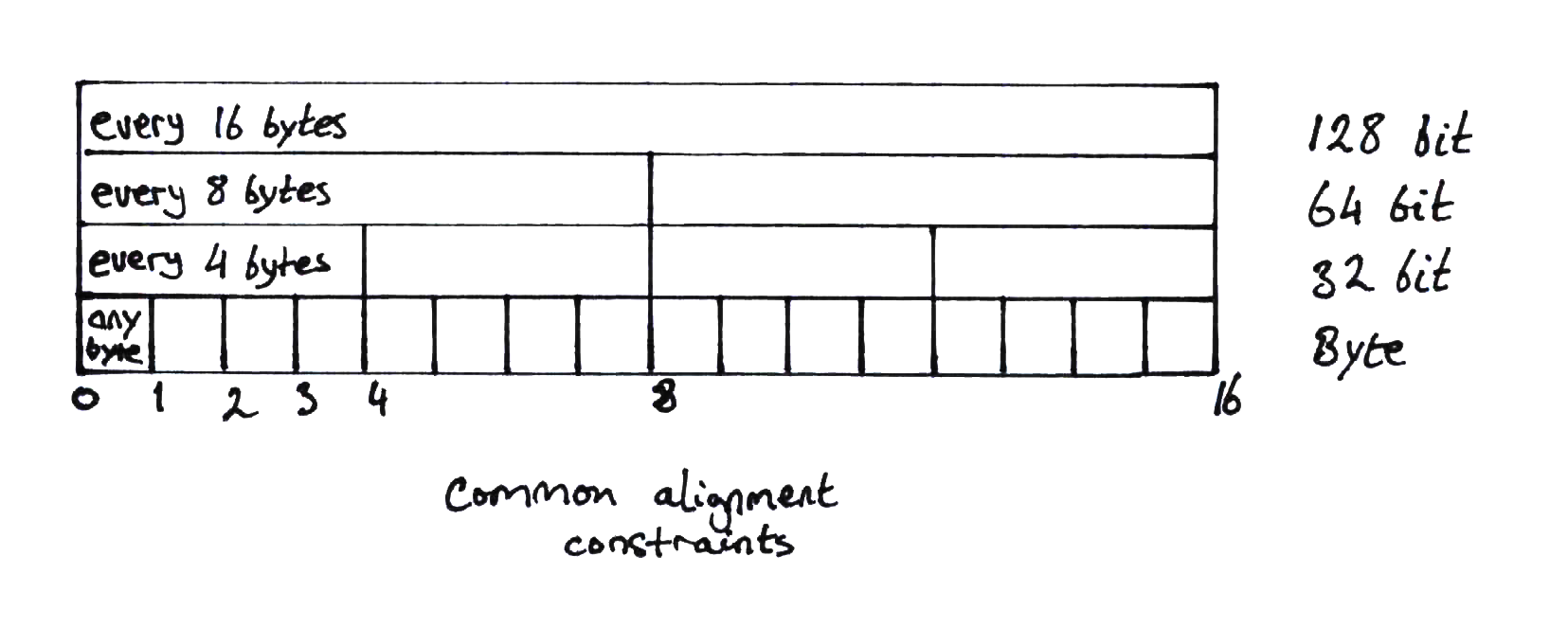

There are subtleties in memory access alignment:

- Some hardware architectures and implementations may fault on unaligned memory access.

- Atomic operations require word-aligned access.

- SIMD operations typically require double-word-aligned access.

- In practice on 64 bit architectures, allocators align objects to 8 byte boundaries for 64 bit objects and smaller and 16 byte boundaries for larger objects for performance optimization and the above reasons.

Intel 32 and 64 bit x86 architectures allow general access to be unaligned but will probably incur an access penalty. The story on 32bit ARM and aarch64 is sufficiently similar but there is a higher chance that an ARM core is configured to raise a bus error on a misaligned access.

Another very important factor is atomic memory operations. Atomic access works on a whole word basis - any unaligned access by nature cannot be guaranteed to be atomic as it will probably involve more than one access. To support atomic operations, alignment must be minmally on word boundaries.

SIMD operations, tending to be 128 bits wide or higher, should be aligned to 16 byte boundaries for optimal code generation and performance. Unaligned loads and stores may be allowed but normally these incur performance penalties.

While Intel allows unaligned access (that is, alignment on any byte boundary), the recommended (see section 3.6.4) alignment for objects larger than 64 bits is to 16 byte boundaries.

Apparently system malloc() implementations

tend to comply

with the 16 byte boundary.

To verify the above, a rough test of both the system allocator and jemalloc

on x86_64 by using Box::new() on a set of types (u8, u16, u32, u64,

String and a larger struct) confirms a minimum of 8 byte alignment for

anything word size or smaller and 16 byte alignment for everything bigger.

Sample pointer printouts below are for jemalloc but Linux libc malloc produced

the same pattern:

p=0x7fb78b421028 u8

p=0x7fb78b421030 u16

p=0x7fb78b421038 u32

p=0x7fb78b421050 u64

p=0x7fb78b420060 "spam"

p=0x7fb78b4220f0 Hoge { y: 2, z: "ほげ", x: 1 }

Compare with std::mem::align_of<T>() which, on x86_64 for example,

returns alignment values:

u8: 1 byteu16: 2 bytesu32: 4 bytesu64: 8 bytes- any bigger struct: 8

Thus despite the value of std::mem::align_of::<T>(), mature allocators will

do what is most pragmatic and follow recommended practice in support of optimal

performance.

With all that in mind, to keep things simple, we'll align everything to a double-word boundaries. When we add in prepending an object header, the minimum memory required for an object will be two words anyway.

Thus, the allocated size of an object will be calculated1 by

let alignment = size_of::<usize>() * 2;

// mask out the least significant bits that correspond to the alignment - 1

// then add the full alignment

let size = (size_of::<T>() & !(alignment - 1)) + alignment;

For a more detailed explanation of alignment adjustment calculations, see phil-opp's kernel heap allocator.

Obtaining Blocks of Memory

When requesting blocks of memory at a time, one of the questions is what is the desired block alignment?

- In deciding, one factor is that using an alignment that is a multiple of the page size can make it easier to return memory to the operating system.

- Another factor is that if the block is aligned to it's size, it is fast to do bitwise arithmetic on a pointer to an object in a block to compute the block boundary and therefore the location of any block metadata.

With both these in mind we'll look at how to allocate blocks that are aligned to the size of the block.

A basic crate interface

A block of memory is defined as a base address and a size, so we need a struct that contains these elements.

To wrap the base address pointer, we'll use the recommended type for building

collections, std::ptr::NonNull<T>,

which is available on stable.

pub struct Block {

ptr: BlockPtr,

size: BlockSize,

}

Where BlockPtr and BlockSize are defined as:

pub type BlockPtr = NonNull<u8>;

pub type BlockSize = usize;

To obtain a Block, we'll create a Block::new() function which, along with

Block::drop(), is implemented internally by wrapping the stabilized Rust alloc

routines:

pub fn new(size: BlockSize) -> Result<Block, BlockError> {

if !size.is_power_of_two() {

return Err(BlockError::BadRequest);

}

Ok(Block {

ptr: internal::alloc_block(size)?,

size,

})

}

Where parameter size must be a power of two, which is validated on the first

line of the function. Requiring the block size to be a power of two means

simple bit arithmetic can be used to find the beginning and end of a block in

memory, if the block size is always the same.

Errors take one of two forms, an invalid block-size or out-of-memory, both

of which may be returned by Block::new().

#[derive(Debug, PartialEq)]

pub enum BlockError {

/// Usually means requested block size, and therefore alignment, wasn't a

/// power of two

BadRequest,

/// Insufficient memory, couldn't allocate a block

OOM,

}

Now on to the platform-specific implementations.

Custom aligned allocation on stable Rust

On the stable rustc channel we have access to some features of the Alloc API.

This is the ideal option since it abstracts platform specifics for us, we do not need to write different code for Unix and Windows ourselves.

Fortunately there is enough stable functionality to fully implement what we need.

With an appropriate underlying implementation this code should compile and

execute for any target. The allocation function, implemented in the internal

mod, reads:

pub fn alloc_block(size: BlockSize) -> Result<BlockPtr, BlockError> {

unsafe {

let layout = Layout::from_size_align_unchecked(size, size);

let ptr = alloc(layout);

if ptr.is_null() {

Err(BlockError::OOM)

} else {

Ok(NonNull::new_unchecked(ptr))

}

}

}

Once a block has been allocated, there is no safe abstraction at this level

to access the memory. The Block will provide a bare pointer to the beginning

of the memory and it is up to the user to avoid invalid pointer arithmetic

and reading or writing outside of the block boundary.

pub fn as_ptr(&self) -> *const u8 {

self.ptr.as_ptr()

}

Deallocation

Again, using the stable Alloc functions:

pub fn dealloc_block(ptr: BlockPtr, size: BlockSize) {

unsafe {

let layout = Layout::from_size_align_unchecked(size, size);

dealloc(ptr.as_ptr(), layout);

}

}

The implementation of Block::drop() calls the deallocation function

for us so we can create and drop Block instances without leaking memory.

Testing

We want to be sure that the system level allocation APIs do indeed return block-size-aligned blocks. Checking for this is straightforward.

A correctly aligned block should have it's low bits

set to 0 for a number of bits that represents the range of the block

size - that is, the block size minus one. A bitwise XOR will highlight any

bits that shouldn't be set:

// the block address bitwise AND the alignment bits (size - 1) should

// be a mutually exclusive set of bits

let mask = size - 1;

assert!((block.ptr.as_ptr() as usize & mask) ^ mask == mask);

The type of allocation

Before we start writing objects into Blocks, we need to know the nature of

the interface in Rust terms.

If we consider the global allocator in Rust, implicitly available via

Box::new(), Vec::new() and so on, we'll notice that since the global

allocator is available on every thread and allows the creation of new

objects on the heap (that is, mutation of the heap) from any code location

without needing to follow the rules of borrowing and mutable aliasing,

it is essentially a container that implements Sync and the interior

mutability pattern.

We need to follow suit, but we'll leave Sync for advanced chapters.

An interface that satisfies the interior mutability property, by borrowing the allocator instance immutably, might look like:

trait AllocRaw {

fn alloc<T>(&self, object: T) -> *const T;

}

naming it AllocRaw because when layering on top of Block we'll

work with raw pointers and not concern ourselves with the lifetime of

allocated objects.

It will become a little more complex than this but for now, this captures the essence of the interface.

An allocator: Sticky Immix

Quickly, some terminology:

- Mutator: the thread of execution that writes and modifies objects on the heap.

- Live objects: the graph of objects that the mutator can reach, either directly from it's stack or indirectly through other reachable objects.

- Dead objects: any object that is disconnected from the mutator's graph of live objects.

- Collector: the thread of execution that identifies objects that are no longer reachable by the mutator and marks them as free space that can be reused

- Fragmentation: as objects have many different sizes, after allocating and freeing many objects, gaps of unused memory appear between objects that are too small for most objects but that add up to a measurable percentage of wasted space.

- Evacuation: when the collector moves live objects to another block of memory so that the originating block can be de_fragmented

About Immix

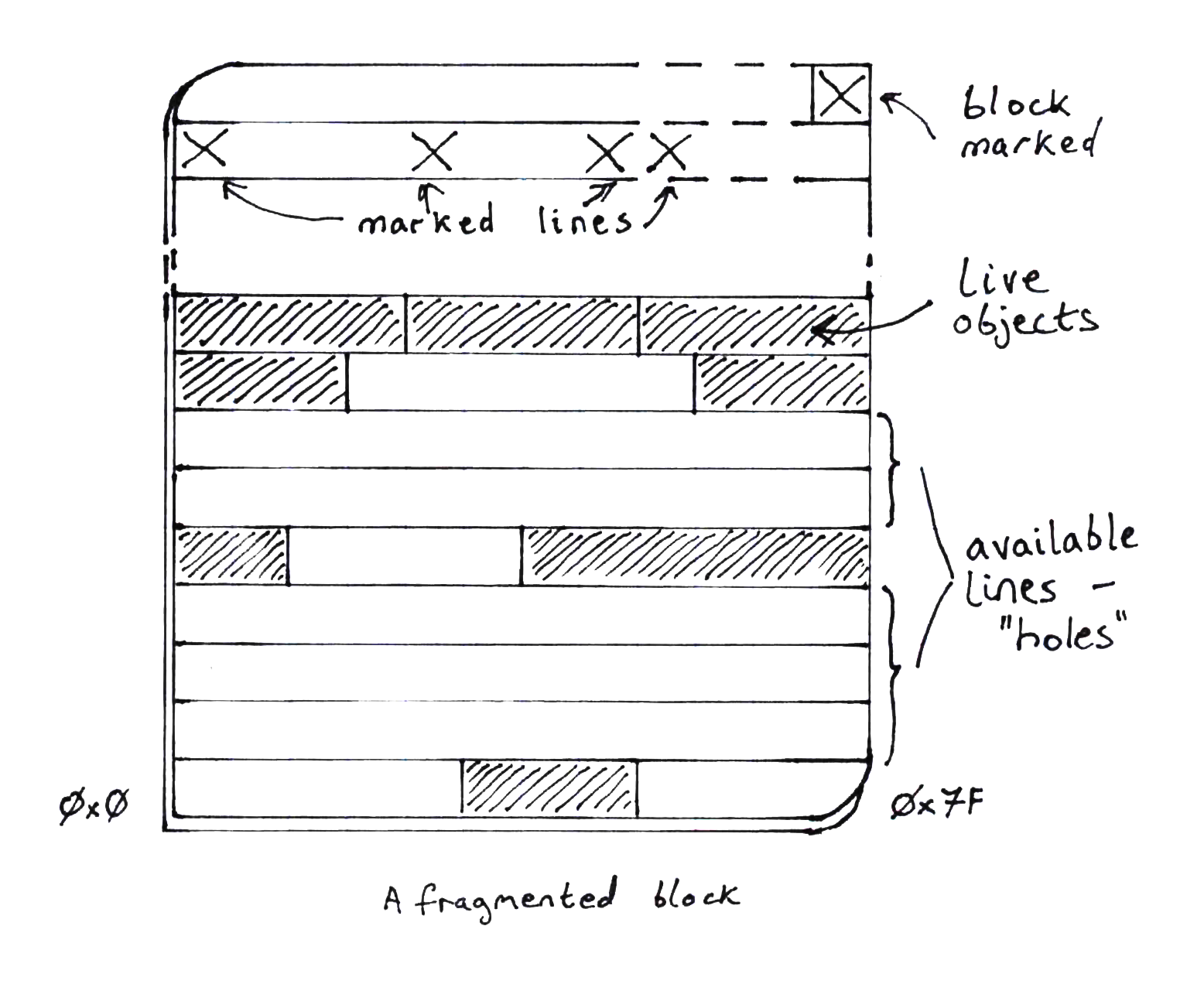

Immix is a memory management scheme that considers blocks of fixed size at a time. Each block is divided into lines. In the original paper, blocks are sized at 32k and lines at 128 bytes. Objects are allocated into blocks using bump allocation and objects can cross line boundaries.

During tracing to discover live objects, objects are marked as live, but the line, or lines, that each object occupies are also marked as live. This can mean, of course, that a line may contain a dead object and a live object but the whole line is marked as live.

To mark lines as live, a portion of the block is set aside for line mark bits, usually one byte per mark bit. If any line is marked as live, the whole block is also marked as live. There must also, therefore, be a bit that indicates block liveness.

Conservative marking

The Immix authors found that marking every line that contains a live object could be expensive. For example, many small objects might cross line boundaries, requiring two lines to be marked as live. This would require looking up the object size and calculating whether the object crosses the boundary into the next line. To save CPU cycles, they simplified the algorithm by saying that any object that fits in a line might cross into the next line so we will conservatively consider the next line marked just in case. This sped up marking at little fragmentation expense.

Collection

During collection, only lines not marked as live are considered available for re-use. Inevitably then, there is acceptance of some amount of fragmentation at this point.

Full Immix implements evacuating objects out of the most fragmented blocks into fresh, empty blocks, for defragmentation.

For simplicity of implementation, we'll leave out this evacuation operation in this guide. This is called Sticky Immix.

We'll also stick to a single thread for the mutator and collector to avoid the complexity overhead of a multi-threaded implementation for now.

Recommended reading: Stephen M. Blackburn & Kathryn S. McKinley - Immix: A Mark-Region Garbage Collector with Space Efficiency, Fast Collection, and Mutator Performance

About this part of the book

This section will describe a Rust crate that implements a Sticky Immix heap. As part of this implementation we will dive into the crate API details to understand how we can define an interface between the heap and the language VM that will come later.

What this is not: custom memory management to replace the global Rust allocator! The APIs we arrive at will be substantially incompatible with the global Rust allocator.

Bump allocation

Now that we can get blocks of raw memory, we need to write objects into it. The simplest way to do this is to write objects into a block one after the other in consecutive order. This is bump allocation - we have a pointer, the bump pointer, which points at the space in the block after the last object that was written. When the next object is written, the bump pointer is incremented to point to the space after that object.

In a twist of mathematical convenience, though, it is more efficient to bump allocate from a high memory location downwards. We will do that.

We will used a fixed power-of-two block size. The benefit of this is that given a pointer to an object, by zeroing the bits of the pointer that represent the block size, the result points to the beginning of the block. This will be useful later when implementing garbage collection.

Our block size will be 32k, a reasonably optimal size arrived at in the original Immix paper. This size can be any power of two though and different use cases may show different optimal sizes.

pub const BLOCK_SIZE_BITS: usize = 15;

pub const BLOCK_SIZE: usize = 1 << BLOCK_SIZE_BITS;

Now we'll define a struct that wraps the block with a bump pointer and garbage collection metadata:

pub struct BumpBlock {

cursor: *const u8,

limit: *const u8,

block: Block,

meta: BlockMeta,

}

Bump allocation basics

In this struct definition, there are two members that we are interested in

to begin with. The other two, limit and meta, will be discussed in the

next section.

cursor: this is the bump pointer. In our implementation it is the index into the block where the last object was written.block: this is theBlockitself in which objects will be written.

Below is a start to a bump allocation function:

impl BumpBlock {

pub fn inner_alloc(&mut self, alloc_size: usize) -> Option<*const u8> {

let block_start_ptr = self.block.as_ptr() as usize;

let cursor_ptr = self.cursor as usize;

// align to word boundary

let align_mask = usize = !(size_of::<usize>() - 1);

let next_ptr = cursor_ptr.checked_sub(alloc_size)? & align_mask;

if next_ptr < block_start_ptr {

// allocation would start lower than block beginning, which means

// there isn't space in the block for this allocation

None

} else {

self.cursor = next_ptr as *const u8;

Some(next_ptr)

}

}

}

In our function, the alloc_size parameter should be a number of bytes of

memory requested.

The value of alloc_size may produce an unaligned pointer at which to write the

object. Fortunately, by bump allocating downward we can apply a simple mask to the

pointer to align it down to the nearest word:

let align_mask = usize = !(size_of::<usize>() - 1);

In initial implementation, allocation will simply return None if the block

does not have enough capacity for the requested alloc_size. If there is

space, it will be returned as a Some(*const u8) pointer.

Note that this function does not write the object to memory, it merely

returns a pointer to an available space. Writing the object will require

invoking the std::ptr::write function. We will do that in a separate module

but for completeness of this chapter, this might look something like:

use std::ptr::write;

unsafe fn write<T>(dest: *const u8, object: T) {

write(dest as *mut T, object);

}

Some time passes...

After allocating and freeing objects, we will have gaps between objects in a block that can be reused. The above bump allocation algorithm is unaware of these gaps so we'll have to modify it before it can allocate into fragmented blocks.

To recap, in Immix, a block is divided into lines and only whole lines are considered for reuse. When objects are marked as live, so are the lines that an object occupies. Therefore, only lines that are not marked as live are usable for allocation into. Even if a line is only partially allocated into, it is not a candidate for further allocation.

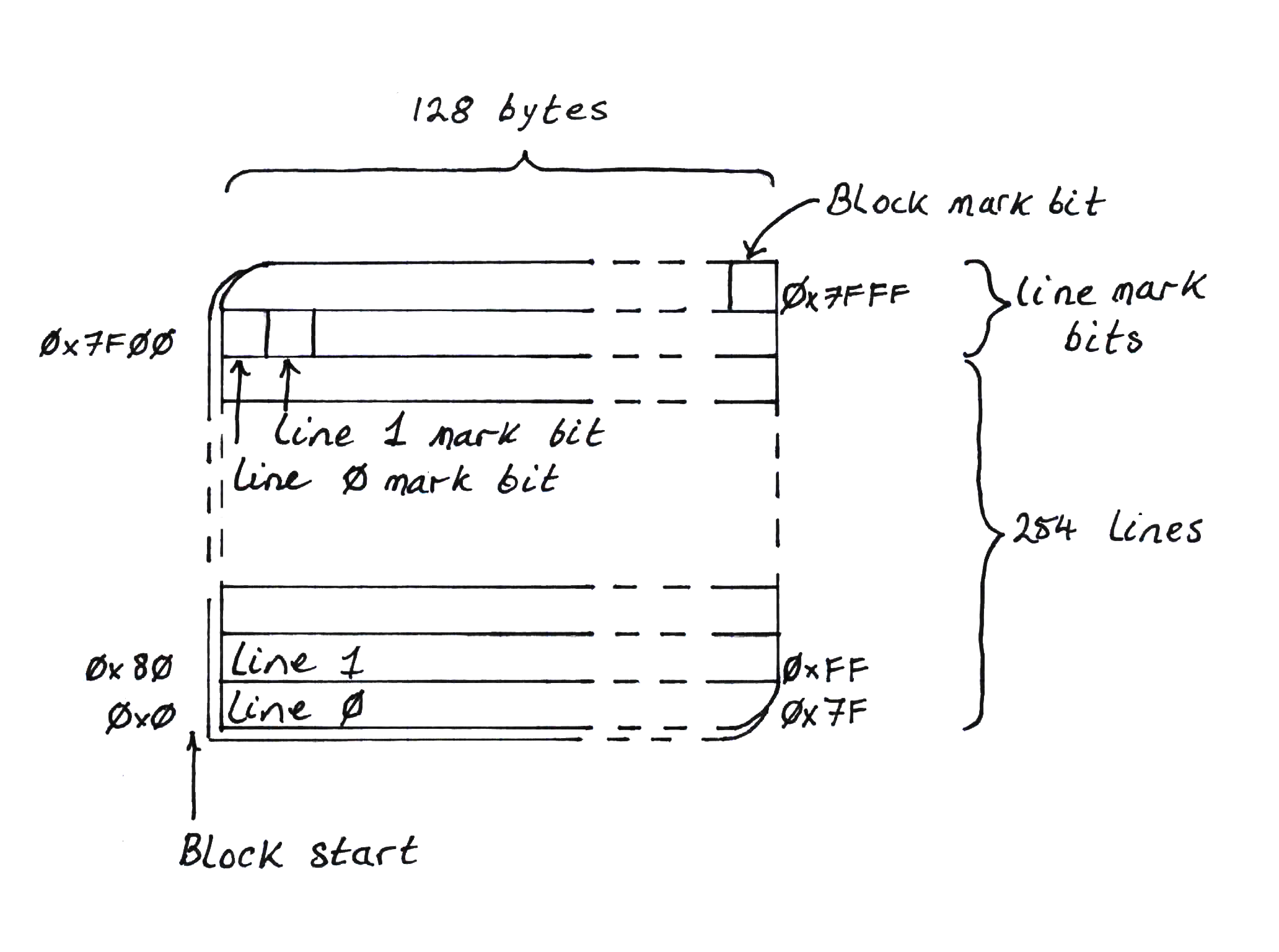

In our implementation we will use the high bytes of the Block to represent

these line mark bits, where each line is represented by a single byte.

We'll need a data structure to represent this. we'll call it BlockMeta,

but first some constants that we need in order to know

- how big a line is

- how many lines are in a block

- how many bytes remain in the

Blockfor allocating into

pub const LINE_SIZE_BITS: usize = 7;

pub const LINE_SIZE: usize = 1 << LINE_SIZE_BITS;

// How many total lines are in a block

pub const LINE_COUNT: usize = BLOCK_SIZE / LINE_SIZE;

// We need LINE_COUNT number of bytes for marking lines, so the capacity of a block

// is reduced by that number of bytes.

pub const BLOCK_CAPACITY: usize = BLOCK_SIZE - LINE_COUNT;

For clarity, let's put some numbers to the definitions we've made so far:

- A block size is 32Kbytes

- A line is 128 bytes long

- The number of lines within a 32Kbyte

Blockis 256

Therefore the top 256 bytes of a Block are used for line mark bits. Since

these line mark bits do not need to be marked themselves, the last two bytes

of the Block are not needed to mark lines.

This leaves one last thing to mark: the entire Block. If any line in the

Block is marked, then the Block is considered to be live and must be marked

as such.

We use the final byte of the Block to store the Block mark bit.

The definition of BumpBlock contains member meta which is of type

BlockMeta. We can now introduce the definition of BlockMeta which we simply

need to represent a pointer to the line mark section at the end of the Block:

pub struct BlockMeta {

lines: *mut u8,

}

This pointer could be easily calculated, of course, so this is just a handy shortcut.

Allocating into a fragmented Block

The struct BlockMeta contains one function we will study:

/// When it comes to finding allocatable holes, we bump-allocate downward.

pub fn find_next_available_hole(

&self,

starting_at: usize,

alloc_size: usize,

) -> Option<(usize, usize)> {

// The count of consecutive avaliable holes. Must take into account a conservatively marked

// hole at the beginning of the sequence.

let mut count = 0;

let starting_line = starting_at / constants::LINE_SIZE;

let lines_required = (alloc_size + constants::LINE_SIZE - 1) / constants::LINE_SIZE;

// Counting down from the given search start index

let mut end = starting_line;

for index in (0..starting_line).rev() {

let marked = unsafe { *self.lines.add(index) };

if marked == 0 {

// count unmarked lines

count += 1;

if index == 0 && count >= lines_required {

let limit = index * constants::LINE_SIZE;

let cursor = end * constants::LINE_SIZE;

return Some((cursor, limit));

}

} else {

// This block is marked

if count > lines_required {

// But at least 2 previous blocks were not marked. Return the hole, considering the

// immediately preceding block as conservatively marked

let limit = (index + 2) * constants::LINE_SIZE;

let cursor = end * constants::LINE_SIZE;

return Some((cursor, limit));

}

// If this line is marked and we didn't return a new cursor/limit pair by now,

// reset the hole search state

count = 0;

end = index;

}

}

None

}

The purpose of this function is to locate a gap of unmarked lines of sufficient

size to allocate an object of size alloc_size into.

The input to this function, starting_at, is the offset into the block to start

looking for a hole.

If no suitable hole is found, the return value is None.

If there are unmarked lines lower in memory than the starting_at point (bump

allocating downwards), the return value will be a pair of numbers: (cursor, limit) where:

cursorwill be the new bump pointer valuelimitwill be the lower bound of the available hole.

A deeper dive

Our first variable is a counter of consecutive available lines. This count will always assume that the first line in the sequence is conservatively marked and won't count toward the total, unless it is line 0.

let mut count = 0;

Next, the starting_at and alloc_size arguments have units of bytes but we

want to use line count math, so conversion must be done.

let starting_line = starting_at / constants::LINE_SIZE;

let lines_required = (alloc_size + constants::LINE_SIZE - 1) / constants::LINE_SIZE;

Our final variable will be the end line that, together with starting_line,

will mark the boundary of the hole we hope to find.

let mut end = starting_line;

Now for the loop that identifies holes and ends the function if either:

- a large enough hole is found

- no suitable hole is found

We iterate over lines in decreasing order from starting_line down to line zero

and fetch the mark bit into variable marked.

for index in (0..starting_line).rev() {

let marked = unsafe { *self.lines.add(index) };

If the line is unmarked, we increment our consecutive-unmarked-lines counter.

Then we reach the first termination condition: we reached line zero and we have a large enough hole for our object. The hole extents can be returned, converting back to byte offsets.

if marked == 0 {

count += 1;

if index == 0 && count >= lines_required {

let limit = index * constants::LINE_SIZE;

let cursor = end * constants::LINE_SIZE;

return Some((cursor, limit));

}

} else {

Otherwise if the line is marked, we've reached the end of the current hole (if we were even over one.)

Here, we have the second possible termination condition: we have a large enough hole for our object. The hole extents can be returned, taking the last line as conservatively marked.

This is seen in adding 2 to index:

- 1 for walking back from the current marked line

- plus 1 for walking back from the previous conservatively marked line

If this condition isn't met, our search is reset - count is back to zero and

we keep iterating.

} else {

if count > lines_required {

let limit = (index + 2) * constants::LINE_SIZE;

let cursor = end * constants::LINE_SIZE;

return Some((cursor, limit));

}

count = 0;

end = index;

}

Finally, if iterating over lines reached line zero without finding a hole, we

return None to indicate failure.

}

None

}

Making use of the hole finder

We'll return to the BumpBlock::inner_alloc() function now to make use of

BlockMeta and its hole finding operation.

The BumpBlock struct contains two more members: limit and meta. These

should now be obvious - limit is the known byte offset limit into which

we can allocate, and meta is the BlockMeta instance associated with the

block.

We need to update inner_alloc() with a new condition:

- the size being requested must fit between

self.cursorandself.limit

(Note that for a fresh, new block, self.limit is set to the block size.)

If the above condition is not met, we will call

BlockMeta::find_next_available_hole() to get a new cursor and limit

to try, and repeat that until we've either found a big enough hole or

reached the end of the block, exhausting our options.

The new definition of BumpBlock::inner_alloc() reads as follows:

pub fn inner_alloc(&mut self, alloc_size: usize) -> Option<*const u8> {

let ptr = self.cursor as usize;

let limit = self.limit as usize;

let next_ptr = ptr.checked_sub(alloc_size)? & constants::ALLOC_ALIGN_MASK;

if next_ptr < limit {

let block_relative_limit =

unsafe { self.limit.sub(self.block.as_ptr() as usize) } as usize;

if block_relative_limit > 0 {

if let Some((cursor, limit)) = self

.meta

.find_next_available_hole(block_relative_limit, alloc_size)

{

self.cursor = unsafe { self.block.as_ptr().add(cursor) };

self.limit = unsafe { self.block.as_ptr().add(limit) };

return self.inner_alloc(alloc_size);

}

}

None

} else {

self.cursor = next_ptr as *const u8;

Some(self.cursor)

}

}

and as you can see, this implementation is recursive.

Wrapping this up

At the beginning of this chapter we stated that given a pointer to an object, by zeroing the bits of the pointer that represent the block size, the result points to the beginning of the block.

We'll make use of that now.

During the mark phase of garbage collection, we will need to know which line or

lines to mark, in addition to marking the object itself. We will make a copy of

the BlockMeta instance pointer in the 0th word of the memory block so that

given any object pointer, we can obtain the BlockMeta instance.

In the next chapter we'll handle multiple BumpBlocks so that we can keep

allocating objects after one block is full.

Allocating into Multiple Blocks

Let's now zoom out of the fractal code soup one level and begin arranging multiple blocks so we can allocate - in theory - indefinitely.

Lists of blocks

We'll need a new struct for wrapping multiple blocks:

struct BlockList {

head: Option<BumpBlock>,

overflow: Option<BumpBlock>,

rest: Vec<BumpBlock>,

}

Immix maintains several lists of blocks. We won't include them all in the first iteration but in short they are:

free: a list of blocks that contain no objects. These blocks are held at the ready to allocate into on demandrecycle: a list of blocks that contain some objects but also at least one line that can be allocated intolarge: not a list of blocks, necessarily, but a list of objects larger than the block size, or some other method of accounting for large objectsrest: the rest of the blocks that have been allocated into but are not suitable for recycling

In our first iteration we'll only keep the rest list of blocks and two blocks

to immediately allocate into. Why two? To understand why, we need to understand

how Immix thinks about object sizes.

Immix and object sizes

We've seen that there are two numbers that define granularity in Immix: the block size and the line size. These numbers give us the ability to categorize object sizes:

- small: those that (with object header and alignment overhead) fit inside a line

- medium: those that (again with object header and alignment overhead) are larger than one line but smaller than a block

- large: those that are larger than a block

In the previous chapter we described the basic allocation algorithm: when an object is being allocated, the current block is scanned for a hole between marked lines large enough to allocate into. This does seem like it could be inefficient. We could spend a lot of CPU cycles looking for a big enough hole, especially for a medium sized object.

To avoid this, Immix maintains a second block, an overflow block, to allocate medium objects into that don't fit the first available hole in the main block being allocated into.

Thus two blocks to immediately allocate into:

head: the current block being allocated intooverflow: a block kept handy for writing medium objects into that don't fit theheadblock's current hole

We'll be ignoring large objects for now and attending only to allocating small and medium objects into blocks.

Instead of recycling blocks with holes, or maintaining a list of pre-allocated free blocks, we'll allocate a new block on demand whenever we need more space. We'll get to identifying holes and recyclable blocks in a later chapter.

Managing the overflow block

Generally in our code for this book, we will try to default to not allocating memory unless it is needed. For example, when an array is instantiated, the backing storage will remain unallocated until a value is pushed on to it.

Thus in the definition of BlockList, head and overflow are Option

types and won't be instantiated except on demand.

For allocating into the overflow block we'll define a function in the

BlockList impl:

impl BlockList {

fn overflow_alloc(&mut self, alloc_size: usize) -> Result<*const u8, AllocError> {

...

}

}

The input constraint is that, since overflow is for medium objects, alloc_size

must be less than the block size.

The logic inside will divide into three branches:

- We haven't got an overflow block yet -

self.overflowisNone. In this case we have to instantiate a new block (since we're not maintaining a list of preinstantiated free blocks yet) and then, since that block is empty and we have a medium sized object, we can expect the allocation to succeed.match self.overflow { Some ..., None => { let mut overflow = BumpBlock::new()?; // object size < block size means we can't fail this expect let space = overflow .inner_alloc(alloc_size) .expect("We expected this object to fit!"); self.overflow = Some(overflow); space } } - We have an overflow block and the object fits. Easy.

match self.overflow { // We already have an overflow block to try to use... Some(ref mut overflow) => { // This is a medium object that might fit in the current block... match overflow.inner_alloc(alloc_size) { // the block has a suitable hole Some(space) => space, ... } }, None => ... } - We have an overflow block but the object does not fit. Now we simply

instantiate a new overflow block, adding the old one to the

restlist (in future it will make a good candidate for recycing!). Again, since we're writing a medium object into a block, we can expect allocation to succeed.match self.overflow { // We already have an overflow block to try to use... Some(ref mut overflow) => { // This is a medium object that might fit in the current block... match overflow.inner_alloc(alloc_size) { Some ..., // the block does not have a suitable hole None => { let previous = replace(overflow, BumpBlock::new()?); self.rest.push(previous); overflow.inner_alloc(alloc_size).expect("Unexpected error!") } } }, None => ... }

In this logic, the only error can come from failing to create a new block.

On success, at this level of interface we continue to return a *const u8

pointer to the available space as we're not yet handling the type of the

object being allocated.

You may have noticed that the function signature for overflow_alloc takes a

&mut self. This isn't compatible with the interior mutability model

of allocation. We'll have to wrap the BlockList struct in another struct

that handles this change of API model.

The heap struct

This outer struct will provide the external crate interface and some further implementation of block management.

The crate interface will require us to consider object headers and so in the

struct definition below there is reference to a generic type H that

the user of the heap will define as the object header.

pub struct StickyImmixHeap<H> {

blocks: UnsafeCell<BlockList>,

_header_type: PhantomData<*const H>,

}

Since object headers are not owned directly by the heap struct, we need a

PhantomData instance to associate with H. We'll discuss object headers

in a later chapter.

Now let's focus on the use of the BlockList.

The instance of BlockList in the StickyImmixHeap struct is wrapped in an

UnsafeCell because we need interior mutability. We need to be able to

borrow the BlockList mutably while presenting an immutable interface to

the outside world. Since we won't be borrowing the BlockList in multiple

places in the same call tree, we don't need RefCell and we can avoid it's

runtime borrow checking.

Allocating into the head block

We've already taken care of the overflow block, now we'll handle allocation

into the head block. We'll define a new function:

impl StickyImmixHeap {

fn find_space(

&self,

alloc_size: usize,

size_class: SizeClass,

) -> Result<*const u8, AllocError> {

let blocks = unsafe { &mut *self.blocks.get() };

...

}

}

This function is going to look almost identical to the alloc_overflow()

function defined earlier. It has more or less the same cases to walk through:

headblock isNone, i.e. we haven't allocated a head block yet. Allocate one and write the object into it.- We have

Some(ref mut head)inhead. At this point we divert from thealloc_overflow()function and query the size of the object - if this is is a medium object and the current hole between marked lines in theheadblock is too small, call intoalloc_overflow()and return.

Otherwise, continue to allocate intoif size_class == SizeClass::Medium && alloc_size > head.current_hole_size() { return blocks.overflow_alloc(alloc_size); }headand return. - We have

Some(ref mut head)inheadbut this block is unable to accommodate the object, whether medium or small. We must append the current head to therestlist and create a newBumpBlockto allocate into.

There is one more thing to mention. What about large objects? We'll cover those

in a later chapter. Right now we'll make it an error to try to allocate a large

object by putting this at the beginning of the StickyImmixHeap::inner_alloc()

function:

if size_class == SizeClass::Large {

return Err(AllocError::BadRequest);

}

Where to next?

We have a scheme for finding space in blocks for small and medium objects and so, in the next chapter we will define the external interface to the crate.

Defining the allocation API

Let's look back at the allocator prototype API we defined in the introductory chapter.

trait AllocRaw {

fn alloc<T>(&self, object: T) -> *const T;

}

This will quickly prove to be inadequate and non-idiomatic. For starters, there is no way to report that allocation failed except for perhaps returning a null pointer. That is certainly a workable solution but is not going to feel idiomatic or ergonomic for how we want to use the API. Let's make a couple changes:

trait AllocRaw {

fn alloc<T>(&self, object: T) -> Result<RawPtr<T>, AllocError>;

}

Now we're returning a Result, the failure side of which is an error type

where we can distinguish between different allocation failure modes. This is

often not that useful but working with Result is far more idiomatic Rust

than checking a pointer for being null. We'll allow for distinguishing between

Out Of Memory and an allocation request that for whatever reason is invalid.

#[derive(Copy, Clone, Debug, PartialEq)]

pub enum AllocError {

/// Some attribute of the allocation, most likely the size requested,

/// could not be fulfilled

BadRequest,

/// Out of memory - allocating the space failed

OOM,

}

The second change is that instead of a *const T value in the success

discriminant we'll wrap a pointer in a new struct: RawPtr<T>. This wrapper

will amount to little more than containing a std::ptr::NonNull instance

and some functions to access the pointer.

pub struct RawPtr<T: Sized> {

ptr: NonNull<T>,

}

This'll be better to work with on the user-of-the-crate side.

It'll also make it easier to modify internals or even swap out entire implementations. This is a motivating factor for the design of this interface as we'll see as we continue to amend it to account for object headers now.

Object headers

The purpose of an object header is to provide the allocator, the language runtime and the garbage collector with information about the object that is needed at runtime. Typical data points that are stored might include:

- object size

- some kind of type identifier

- garbage collection information such as a mark flag

We want to create a flexible interface to a language while also ensuring that the interpreter will provide the information that the allocator and garbage collector in this crate need.

We'll define a trait for the user to implement.

pub trait AllocHeader: Sized {

/// Associated type that identifies the allocated object type

type TypeId: AllocTypeId;

/// Create a new header for object type O

fn new<O: AllocObject<Self::TypeId>>(size: u32, size_class: SizeClass, mark: Mark) -> Self;

/// Create a new header for an array type

fn new_array(size: ArraySize, size_class: SizeClass, mark: Mark) -> Self;

/// Set the Mark value to "marked"

fn mark(&mut self, value: Mark);

/// Get the current Mark value

fn mark_is(&self, value: Mark) -> bool;

/// Get the size class of the object

fn size_class(&self) -> SizeClass;

/// Get the size of the object in bytes

fn size(&self) -> u32;

/// Get the type of the object

fn type_id(&self) -> Self::TypeId;

}

Now we have a bunch more questions to answer! Some of these trait methods are

straightforward - fn size(&self) -> u32 returns the object size; mark()

and is_marked() must be GC related. Some are less obvious, such as

new_array() which we'll cover at the end of this chapter.

But this struct references some more types that must be defined and explained.

Type identification

What follows is a set of design trade-offs made for the purposes of this book; there are many ways this could be implemented.

The types described next are all about sharing compile-time and runtime object type information between the allocator, the GC and the interpreter.

We ideally want to make it difficult for the user to make mistakes with this and leak undefined behavior. We would also prefer this to be a safe-Rust interface, while at the same time being flexible enough for the user to make interpreter-appropriate decisions about the header design.

First up, an object header implementation must define an associated type

pub trait AllocHeader: Sized {

type TypeId: AllocTypeId;

}

where AllocTypeId is define simply as:

pub trait AllocTypeId: Copy + Clone {}

This means the interpreter is free to implement a type identifier type however it pleases, the only constraint is that it implements this trait.

Next, the definition of the header constructor,

pub trait AllocHeader: Sized {

...

fn new<O: AllocObject<Self::TypeId>>(

size: u32,

size_class: SizeClass,

mark: Mark

) -> Self;

...

}

refers to a type O that must implement AllocObject which in turn must refer

to the common AllocTypeId. The generic type O is the object for which the

header is being instantiated for.

And what is AllocObject? Simply:

pub trait AllocObject<T: AllocTypeId> {

const TYPE_ID: T;

}

In summary, we have:

AllocHeader: a trait that the header type must implementAllocTypeId: a trait that a type identifier must implementAllocObject: a trait that objects that can be allocated must implement

An example

Let's implement a couple of traits to make it more concrete.

The simplest form of type identifier is an enum. Each discriminant describes a type that the interpreter will use at runtime.

#[derive(PartialEq, Copy, Clone)]

enum MyTypeId {

Number,

String,

Array,

}

impl AllocTypeId for MyTypeId {}

A hypothetical numeric type for our interpreter with the type identifier as associated constant:

struct BigNumber {

value: i64

}

impl AllocObject<MyTypeId> for BigNumber {

const TYPE_ID: MyTypeId = MyTypeId::Number;

}

And finally, here is a possible object header struct and the implementation of

AllocHeader::new():

struct MyHeader {

size: u32,

size_class: SizeClass,

mark: Mark,

type_id: MyTypeId,

}

impl AllocHeader for MyHeader {

type TypeId = MyTypeId;

fn new<O: AllocObject<Self::TypeId>>(

size: u32,

size_class: SizeClass,

mark: Mark

) -> Self {

MyHeader {

size,

size_class,

mark,

type_id: O::TYPE_ID,

}

}

...

}

These would all be defined and implemented in the interpreter and are not

provided by the Sticky Immix crate, while all the functions in the trait

AllocHeader are intended to be called internally by the allocator itself,

not on the interpreter side.

The types SizeClass and Mark are provided by this crate and are enums.

The one drawback to this scheme is that it's possible to associate an incorrect type id constant with an object. This would result in objects being misidentified at runtime and accessed incorrectly, likely leading to panics.

Fortunately, this kind of trait implementation boilerplate is ideal for derive macros. Since the language side will be implementing these structs and traits, we'll defer until the relevant interpreter chapter to go over that.

Back to AllocRaw

Now that we have some object and header definitions and constraints, we need to

apply them to the AllocRaw API. We can't allocate an object unless it

implements AllocObject and has an associated constant that implements

AllocTypeId. We also need to expand the interface with functions that the

interpreter can use to reliably get the header for an object and the object

for a header.

We will add an associated type to tie the allocator API to the header type and indirectly to the type identification that will be used.

pub trait AllocRaw {

type Header: AllocHeader;

...

}

Then we can update the alloc() function definition to constrain the types

that can be allocated to only those that implement the appropriate traits.

pub trait AllocRaw {

...

fn alloc<T>(&self, object: T) -> Result<RawPtr<T>, AllocError>

where

T: AllocObject<<Self::Header as AllocHeader>::TypeId>;

...

}

We need the user and the garbage collector to be able to access the header, so we need a function that will return the header given an object pointer.

The garbage collector does not know about concrete types, it will need to be able to get the header without knowing the object type. It's likely that the interpreter will, at times, also not know the type at runtime.

Indeed, one of the functions of an object header is to, at runtime, given an object pointer, derive the type of the object.

The function signature therefore cannot refer to the type. That is, we can't write

pub trait AllocRaw {

...

// looks good but won't work in all cases

fn get_header<T>(object: RawPtr<T>) -> NonNull<Self::Header>

where

T: AllocObject<<Self::Header as AllocHeader>::TypeId>;

...

}

even though it seems this would be good and right. Instead this function will have to be much simpler:

pub trait AllocRaw {

...

fn get_header(object: NonNull<()>) -> NonNull<Self::Header>;

...

}

We also need a function to get the object from the header:

pub trait AllocRaw {

...

fn get_object(header: NonNull<Self::Header>) -> NonNull<()>;

...

}

These functions are not unsafe but they do return NonNull which implies that

dereferencing the result should be considered unsafe - there is no protection

against passing in garbage and getting garbage out.

Now we have an object allocation function, traits that constrain what can be allocated, allocation header definitions and functions for switching between an object and it's header.

There's one missing piece: we can allocate objects of type T, but

such objects always have compile-time defined size. T is constrained to

Sized types in the RawPtr definition. So how do we allocate dynamically

sized objects, such as arrays?

Dynamically sized types

Since we can allocate objects of type T, and each T must derive

AllocObject and have an associated const of type AllocTypeId, dynamically

sized allocations must fit into this type identification scheme.

Allocating dynamically sized types, or in short, arrays, means there's some ambiguity about the type at compile time as far as the allocator is concerned:

- Are we allocating one object or an array of objects? If we're allocating an array of objects, we'll have to initialize them all. Perhaps we don't want to impose that overhead up front?

- If the allocator knows how many objects compose an array, do we want to bake fat pointers into the interface to carry that number around?

In the same way, then, that the underlying implementation of std::vec::Vec is

backed by an array of u8, we'll do the same. We shall define the return type

of an array allocation to be of type RawPtr<u8> and the size requested to be

in bytes. We'll leave it to the interpreter to build layers on top of this to

handle the above questions.

As the definition of AllocTypeId is up to the interpreter, this crate can't

know the type id of an array. Instead, we will require the interpreter to

implement a function on the AllocHeader trait:

pub trait AllocHeader: Sized {

...

fn new_array(size: ArraySize, size_class: SizeClass, mark: Mark) -> Self;

...

}

This function should return a new object header for an array of u8 with the appropriate type identifier.

We will also add a function to the AllocRaw trait for allocating arrays that

returns the RawPtr<u8> type.

pub trait AllocRaw {

...

fn alloc_array(&self, size_bytes: ArraySize) -> Result<RawPtr<u8>, AllocError>;

...

}

Our complete AllocRaw trait definition now looks like this:

pub trait AllocRaw {

/// An implementation of an object header type

type Header: AllocHeader;

/// Allocate a single object of type T.

fn alloc<T>(&self, object: T) -> Result<RawPtr<T>, AllocError>

where

T: AllocObject<<Self::Header as AllocHeader>::TypeId>;

/// Allocating an array allows the client to put anything in the resulting data

/// block but the type of the memory block will simply be 'Array'. No other

/// type information will be stored in the object header.

/// This is just a special case of alloc<T>() for T=u8 but a count > 1 of u8

/// instances. The caller is responsible for the content of the array.

fn alloc_array(&self, size_bytes: ArraySize) -> Result<RawPtr<u8>, AllocError>;

/// Given a bare pointer to an object, return the expected header address

fn get_header(object: NonNull<()>) -> NonNull<Self::Header>;

/// Given a bare pointer to an object's header, return the expected object address

fn get_object(header: NonNull<Self::Header>) -> NonNull<()>;

}

In the next chapter we'll build out the AllocRaw trait implementation.

Implementing the Allocation API

In this final chapter of the allocation part of the book, we'll cover the

AllocRaw trait implementation.

This trait is implemented on the StickyImmixHeap struct:

impl<H: AllocHeader> AllocRaw for StickyImmixHeap<H> {

type Header = H;

...

}

Here the associated header type is provided as the generic type H, leaving it

up to the interpreter to define.

Allocating objects

The first function to implement is AllocRaw::alloc<T>(). This function must:

- calculate how much space in bytes is required by the object and header

- allocate that space

- instantiate an object header and write it to the first bytes of the space

- copy the object itself to the remaining bytes of the space

- return a pointer to where the object lives in this space

Let's look at the implementation.

impl<H: AllocHeader> AllocRaw for StickyImmixHeap<H> {

fn alloc<T>(&self, object: T) -> Result<RawPtr<T>, AllocError>

where

T: AllocObject<<Self::Header as AllocHeader>::TypeId>,

{

// calculate the total size of the object and it's header

let header_size = size_of::<Self::Header>();

let object_size = size_of::<T>();

let total_size = header_size + object_size;

// round the size to the next word boundary to keep objects aligned and get the size class

// TODO BUG? should this be done separately for header and object?

// If the base allocation address is where the header gets placed, perhaps

// this breaks the double-word alignment object alignment desire?

let size_class = SizeClass::get_for_size(total_size)?;

// attempt to allocate enough space for the header and the object

let space = self.find_space(total_size, size_class)?;

// instantiate an object header for type T, setting the mark bit to "allocated"

let header = Self::Header::new::<T>(object_size as ArraySize, size_class, Mark::Allocated);

// write the header into the front of the allocated space

unsafe {

write(space as *mut Self::Header, header);

}

// write the object into the allocated space after the header

let object_space = unsafe { space.offset(header_size as isize) };

unsafe {

write(object_space as *mut T, object);

}

// return a pointer to the object in the allocated space

Ok(RawPtr::new(object_space as *const T))

}

}

This, hopefully, is easy enough to follow after the previous chapters -

self.find_space()is the function described in the chapter Allocating into multiple blocksSelf::Header::new()will be implemented by the interpreterwrite(space as *mut Self::Header, header)calls the std functionstd::ptr::write

Allocating arrays

We need a similar (but awkwardly different enough) implementation for array

allocation. The key differences are that the type is fixed to a u8 pointer

and the array is initialized to zero bytes. It is up to the interpreter to

write into the array itself.

impl<H: AllocHeader> AllocRaw for StickyImmixHeap<H> {

fn alloc_array(&self, size_bytes: ArraySize) -> Result<RawPtr<u8>, AllocError> {

// calculate the total size of the array and it's header

let header_size = size_of::<Self::Header>();

let total_size = header_size + size_bytes as usize;

// round the size to the next word boundary to keep objects aligned and get the size class

let size_class = SizeClass::get_for_size(total_size)?;

// attempt to allocate enough space for the header and the array

let space = self.find_space(total_size, size_class)?;

// instantiate an object header for an array, setting the mark bit to "allocated"

let header = Self::Header::new_array(size_bytes, size_class, Mark::Allocated);

// write the header into the front of the allocated space

unsafe {

write(space as *mut Self::Header, header);

}

// calculate where the array will begin after the header

let array_space = unsafe { space.offset(header_size as isize) };

// Initialize object_space to zero here.

// If using the system allocator for any objects (SizeClass::Large, for example),

// the memory may already be zeroed.

let array = unsafe { from_raw_parts_mut(array_space as *mut u8, size_bytes as usize) };

// The compiler should recognize this as optimizable

for byte in array {

*byte = 0;

}

// return a pointer to the array in the allocated space

Ok(RawPtr::new(array_space as *const u8))

}

}

Switching between header and object

As stated in the previous chapter, these functions are essentially pointer operations that do not dereference the pointers. Thus they are not unsafe to call, but the types they operate on should have a suitably unsafe API.

NonNull is the chosen parameter and return type and the pointer arithmetic

for obtaining the header from an object pointer of unknown type is shown

below.

For our Immix implementation, since headers are placed immediately ahead of an object, we simply subtract the header size from the object pointer.

impl<H: AllocHeader> AllocRaw for StickyImmixHeap<H> {

fn get_header(object: NonNull<()>) -> NonNull<Self::Header> {

unsafe { NonNull::new_unchecked(object.cast::<Self::Header>().as_ptr().offset(-1)) }

}

}

Getting the object from a header is the reverse - adding the header size to the header pointer results in the object pointer:

impl<H: AllocHeader> AllocRaw for StickyImmixHeap<H> {

fn get_object(header: NonNull<Self::Header>) -> NonNull<()> {

unsafe { NonNull::new_unchecked(header.as_ptr().offset(1).cast::<()>()) }

}

}

Conclusion

Thus ends the first part of our Immix implementation. In the next part of the book we will jump over the fence to the interpreter and begin using the interfaces we've defined in this part.

An interpreter: Eval-rs

In this part of the book we'll dive into creating:

- a safe Rust layer on top of the Sticky Immix API of the previous part

- a compiler for a primitive s-expression syntax language

- a bytecode based virtual machine

So what kind of interpreter will we implement? This book is a guide to help you along your own journey and not not intended to provide an exhaustive language ecosystem. The direction we'll take is to support John McCarthy's classic s-expression based meta-circular evaluator1.

Along the way we'll need to implement fundamental data types and structures from scratch upon our safe layer - symbols, pairs, arrays and dicts - with each chapter building upon the previous ones.

While this will not result in an exhaustive language implementation, you'll see that we will end up with all the building blocks for you to take it the rest of the way!

We shall name our interpreter "Eval-rs", for which we have an appropriate illustration generously provided by the author's then 10 year old daughter.

We'll begin by defining the safe abstration over the Sticky Immix interface. Then we'll put that to use in parsing s-expressions into a very simple data structure.

Once we've covered those basics, we'll build arrays and dicts and then use those in the compiler and virtual machine.

These days this is cliché but that is substantially to our benefit. We're not trying to create yet another Lisp, rather the fact that there is a preexisting design of some elegance and historical interest is a convenience. For a practical, accessible introduction to the topic, do see Paul Graham's The Roots of Lisp

Allocating objects and dereferencing safely

In this chapter we'll build some safe Rust abstractions over the allocation API defined in the Sticky Immix crate.

Let's first recall this interface:

pub trait AllocRaw {

/// An implementation of an object header type

type Header: AllocHeader;

/// Allocate a single object of type T.

fn alloc<T>(&self, object: T) -> Result<RawPtr<T>, AllocError>

where

T: AllocObject<<Self::Header as AllocHeader>::TypeId>;

/// Allocating an array allows the client to put anything in the resulting data

/// block but the type of the memory block will simply be 'Array'. No other

/// type information will be stored in the object header.

/// This is just a special case of alloc<T>() for T=u8 but a count > 1 of u8

/// instances. The caller is responsible for the content of the array.

fn alloc_array(&self, size_bytes: ArraySize) -> Result<RawPtr<u8>, AllocError>;

/// Given a bare pointer to an object, return the expected header address

fn get_header(object: NonNull<()>) -> NonNull<Self::Header>;

/// Given a bare pointer to an object's header, return the expected object address

fn get_object(header: NonNull<Self::Header>) -> NonNull<()>;

}

These are the functions we'll be calling. When we allocate an object, we'll get

back a RawPtr<T> which has no safe way to dereference it. This is impractical,

we very much do not want to wrap every dereferencing in unsafe { ... }.

We'll need a layer over RawPtr<T> where we can guarantee safe dereferencing.

Pointers

In safe Rust, mutable (&mut) and immutable (&) references are passed around

to access objects. These reference types are compile-time constrained pointers

where the constraints are

- the mutability of the access

- the lifetime of the access

For our layer over RawPtr<T> we'll have to consider both these constraints.

Mutability

This constraint is concerned with shared access to an object. In other words, it cares about how many pointers there are to an object at any time and whether they allow mutable or immutable access.

The short of it is:

- Either only one

&mutreference may be held in a scope - Or many

&immutable references may be held in a scope

The compiler must be able to determine that a &mut reference is the only

live reference in it's scope that points at an object in order

for mutable access to that object to be safe of data races.

In a runtime memory managed language such as the interpreter we are building, we will not have compile time knowledge of shared access to objects. We won't know at compile time how many pointers to an object we may have at any time. This is the normal state of things in languages such as Python, Ruby or Javascript.

This means that we can't allow &mut references in our safe layer at all!

If we're restricted to & immutable references everywhere, that then means

we must apply the interior mutability pattern everywhere in our design in

order to comply with the laws of safe Rust.

Lifetime

The second aspect to references is their lifetime. This concerns the duration of the reference, from inception until it goes out of scope.

The key concept to think about now is "scope."

In an interpreted language there are two major operations on the objects in memory:

fn run_mutator() {

parse_source_code();

compile();

execute_bytecode();

}

and

fn run_garbage_collection() {

trace_objects();

free_dead_objects();

}

A few paragraphs earlier we determined that we can't have &mut references

to objects in our interpreter.

By extension, we can't safely hold a mutable reference to the entire heap as a data structure.

Except, that is exactly what garbage collection requires. The nature of

garbage collection is that it views the entire heap as a single data structure

in it's own right that it needs to traverse and modify. It wants the

heap to be &mut.

Consider, especially, that some garbage collectors move objects, so that pointers to moved objects, wherever they may be, must be modified by the garbage collector without breaking the mutator! The garbage collector must be able to reliably discover every single pointer to moved objects to avoid leaving invalid pointers scattered around1.

Thus we have two mutually exclusive interface requirements, one that must

only hold & object references and applies interior mutability to the heap

and the other that wants the whole heap to be &mut.

For this part of the book, we'll focus on the use of the allocator and save garbage collection for a later part.

This mutual exclusivity constraint on the allocator results in the statements:

- When garbage collection is running, it is not safe to run the mutator2

- When garbage collection is not running, it is safe to run the mutator

Thus our abstraction must encapsulate a concept of a time when "it is safe to run the mutator" and since we're working with safe Rust, this must be a compile time concept.

Scopes and lifetimes are perfect for this abstraction. What we'll need is some way to define a lifetime (that is, a scope) within which access to the heap by the mutator is safe.

Some pointer types

First, let's define a simple pointer type that can wrap an allocated type T

in a lifetime:

pub struct ScopedPtr<'guard, T: Sized> {

value: &'guard T,

}

This type will implement Clone, Copy and Deref - it can be passed around

freely within the scope and safely dereferenced.

As you can see we have a lifetime 'guard that we'll use to restrict the

scope in which this pointer can be accessed. We need a mechanism to restrict

this scope.

The guard pattern is what we'll use, if the hint wasn't strong enough.

We'll construct some types that ensure that safe pointers such as

ScopedPtr<T>, and access to the heap at in any way, are mediated by an

instance of a guard type that can provide access.

We will end up passing a reference to the guard instance around everywhere. In most cases we won't care about the instance type itself so much as the lifetime that it carries with it. As such, we'll define a trait for this type to implement that so that we can refer to the guard instance by this trait rather than having to know the concrete type. This'll also allow other types to proxy the main scope-guarding instance.

pub trait MutatorScope {}

You may have noticed that we've jumped from RawPtr<T> to ScopedPtr<T> with

seemingly nothing to bridge the gap. How do we get a ScopedPtr<T>?

We'll create a wrapper around RawPtr<T> that will complete the picture. This

wrapper type is what will hold pointers at rest inside any data structures.

#[derive(Clone)]

pub struct CellPtr<T: Sized> {

inner: Cell<RawPtr<T>>,

}

This is straightforwardly a RawPtr<T> in a Cell to allow for modifying the

pointer. We won't allow dereferencing from this type either though.

Remember that dereferencing a heap object pointer is only safe when we are

in the right scope? We need to create a ScopedPtr<T> from a CellPtr<T>

to be able to use it.

First we'll add a helper function to RawPtr<T> in our interpreter crate so

we can safely dereference a RawPtr<T>. This code says that, given an instance

of a MutatorScope-implementing type, give me back a reference type with

the same lifetime as the guard that I can safely use. Since the _guard

parameter is never used except to define a lifetime, it should be optimized

out by the compiler!

pub trait ScopedRef<T> {

fn scoped_ref<'scope>(&self, guard: &'scope dyn MutatorScope) -> &'scope T;

}

impl<T> ScopedRef<T> for RawPtr<T> {

fn scoped_ref<'scope>(&self, _guard: &'scope dyn MutatorScope) -> &'scope T {

unsafe { &*self.as_ptr() }

}

}

We'll use this in our CellPtr<T> to obtain a ScopedPtr<T>:

impl<T: Sized> CellPtr<T> {

pub fn get<'guard>(&self, guard: &'guard dyn MutatorScope) -> ScopedPtr<'guard, T> {

ScopedPtr::new(guard, self.inner.get().scoped_ref(guard))

}

}

Thus, anywhere (structs, enums) that needs to store a pointer to something on

the heap will use CellPtr<T> and any code that accesses these pointers

during the scope-guarded mutator code will obtain ScopedPtr<T> instances

that can be safely dereferenced.

The heap and the mutator

The next question is: where do we get an instance of MutatorScope from?

The lifetime of an instance of a MutatorScope will define the lifetime

of any safe object accesses. By following the guard pattern, we will find

we have:

- a heap struct that contains an instance of the Sticky Immix heap

- a guard struct that proxies the heap struct for the duration of a scope

- a mechanism to enforce the scope limit

A heap struct

Let's make a type alias for the Sticky Immix heap so we aren't referring to it as such throughout the interpreter:

pub type HeapStorage = StickyImmixHeap<ObjectHeader>;

The let's put that into a heap struct, along with any other interpreter-global storage:

struct Heap {

heap: HeapStorage,

syms: SymbolMap,

}

We'll discuss the SymbolMap type in the next chapter.

Now, since we've wrapped the Sticky Immix heap in our own Heap struct,

we'll need to impl an alloc() method to proxy the Sticky Immix

allocation function.

impl Heap {

fn alloc<T>(&self, object: T) -> Result<RawPtr<T>, RuntimeError>

where

T: AllocObject<TypeList>,

{

Ok(self.heap.alloc(object)?)

}

}

A couple things to note about this function:

- It returns

RuntimeErrorin the error case, this type convertsFromthe Sticky Immix crate's error type. - The

whereconstraint is similar to that ofAllocRaw::alloc()but in now we have a concreteTypeListtype to bind to. We'll look atTypeListin the next chapter along withSymbolMap.

A guard struct

This next struct will be used as a scope-limited proxy for the Heap struct

with one major difference: function return types will no longer be RawPtr<T>

but ScopedPtr<T>.

pub struct MutatorView<'memory> {

heap: &'memory Heap,

}

Here in this struct definition, it becomes clear that all we are doing is

borrowing the Heap instance for a limited lifetime. Thus, the lifetime of

the MutatorView instance will be the lifetime that all safe object

access is constrained to.

A look at the alloc() function now:

impl<'memory> MutatorView<'memory> {

pub fn alloc<T>(&self, object: T) -> Result<ScopedPtr<'_, T>, RuntimeError>

where

T: AllocObject<TypeList>,

{

Ok(ScopedPtr::new(

self,

self.heap.alloc(object)?.scoped_ref(self),

))

}

}

Very similar to Heap::alloc() but the return type is now a ScopedPtr<T>

whose lifetime is the same as the MutatorView instance.

Enforcing a scope limit

We now have a Heap and a guard, MutatorView, but we want one more thing:

to prevent an instance of MutatorView from being returned from anywhere -

that is, enforcing a scope within which an instance of MutatorView will

live and die. This will make it easier to separate mutator operations and

garbage collection operations.

First we'll apply a constraint on how a mutator gains heap access: through a trait.

pub trait Mutator: Sized {

type Input;

type Output;

fn run(&self, mem: &MutatorView, input: Self::Input) -> Result<Self::Output, RuntimeError>;

// TODO

// function to return iterator that iterates over roots

}

If a piece of code wants to access the heap, it must implement this trait!

Secondly, we'll apply another wrapper struct, this time to the Heap type.

This is so that we can borrow the heap member instance.

pub struct Memory {

heap: Heap,

}

This Memory struct and the Mutator trait are now tied together with a

function:

impl Memory {

pub fn mutate<M: Mutator>(&self, m: &M, input: M::Input) -> Result<M::Output, RuntimeError> {

let mut guard = MutatorView::new(self);

m.run(&mut guard, input)

}

}

The key to the scope limitation mechanism is that this mutate function is

the only way to gain access to the heap. It creates an instance of

MutatorView that goes out of scope at the end of the function and thus

can't leak outside of the call stack.

An example

Let's construct a simple example to demonstrate these many parts. This

will omit defining a TypeId and any other types that we didn't discuss

above.

struct Stack {}

impl Stack {

fn say_hello(&self) {

println!("I'm the stack!");

}

}

struct Roots {

stack: CellPtr<Stack>

}

impl Roots {

fn new(stack: ScopedPtr<'_, Stack>) -> Roots {

Roots {

stack: CellPtr::new_with(stack)

}

}

}

struct Interpreter {}

impl Mutator for Interpreter {

type Input: ();

type Output: Roots;

fn run(&self, mem: &MutatorView, input: Self::Input) -> Result<Self::Output, RuntimeError> {

let stack = mem.alloc(Stack {})?; // returns a ScopedPtr<'_, Stack>

stack.say_hello();

let roots = Roots::new(stack);

let stack_ptr = roots.stack.get(mem); // returns a ScopedPtr<'_, Stack>

stack_ptr.say_hello();

Ok(roots)

}

}

fn main() {

...

let interp = Interpreter {};

let result = memory.mutate(&interp, ());

let roots = result.unwrap();

// no way to do this - compile error

let stack = roots.stack.get();

...

}

In this simple, contrived example, we instantiated a Stack on the heap.

An instance of Roots is created on the native stack and given a pointer

to the Stack instance. The mutator returns the Roots object, which

continues to hold a pointer to a heap object. However, outside of the run()

function, the stack member can't be safely accesed.

Up next: using this framework to implement parsing!

This is the topic of discussion in Felix Klock's series GC and Rust which is recommended reading.

while this distinction exists at the interface level, in reality there are multiple phases in garbage collection and not all of them require exclusive access to the heap. This is an advanced topic that we won't bring into consideration yet.

Tagged pointers and object headers

Since our virtual machine will support a dynamic language where the compiler does no type checking, all the type information will be managed at runtime.

In the previous chapter, we introduced a pointer type ScopedPtr<T>. This

pointer type has compile time knowledge of the type it is pointing at.

We need an alternative to ScopedPtr<T> that can represent all the

runtime-visible types so they can be resolved at runtime.

As we'll see, carrying around type information or looking it up in the header on every access will be inefficient space and performance-wise.

We'll implement a common optimization: tagged pointers.

Runtime type identification

The object header can always give us the type id for an object, given a pointer to the object. However, it requires us to do some arithmetic on the pointer to get the location of the type identifier, then dereference the pointer to get the type id value. This dereference can be expensive if the object being pointed at is not in the CPU cache. Since getting an object type is a very common operation in a dynamic language, these lookups become expensive, time-wise.

Rust itself doesn't have runtime type identification but does have runtime dispatch through trait objects. In this scheme a pointer consists of two words: the pointer to the object itself and a second pointer to the vtable where the concrete object type's methods can be looked up. The generic name for this form of pointer is a fat pointer.

We could easily use a fat pointer type for runtime type identification in our interpreter. Each pointer could carry with it an additional word with the type id in it, or we could even just use trait objects!

A dynamically typed language will manage many pointers that must be type identified at runtime. Carrying around an extra word per pointer is expensive, space-wise, however.

Tagged pointers

Many runtimes implement tagged pointers to avoid the space overhead, while partially improving the time overhead of the header type-id lookup.

In a pointer to any object on the heap, the least most significant bits turn out to always be zero due to word or double-word alignment.

On a 64 bit platform, a pointer is a 64 bit word. Since objects are at least word-aligned, a pointer is always be a multiple of 8 and the 3 least significant bits are always 0. On 32 bit platforms, the 2 least significant bits are always 0.

64..............48..............32..............16...........xxx

0b1111111111111111111111111111111111111111111111111111111111111000

/ |

/ |

unused

When dereferencing a pointer, these bits must always be zero. But we can use them in pointers at rest to store a limited type identifier! We'll limit ourselves to 2 bits of type identifier so as to not complicate our code in distinguishing between 32 and 64 bit platforms1.

Given we'll only have 4 possible types we can id directly from a pointer, we'll still need to fall back on the object header for types that don't fit into this range.

Encoding this in Rust

Flipping bits on a pointer directly definitely constitutes a big Unsafe. We'll

need to make a tagged pointer type that will fundamentally be unsafe because

it won't be safe to dereference it. Then we'll need a safe abstraction over

that type to make it safe to dereference.

But first we need to understand the object header and how we get an object's type from it.

The object header

We introduced the object header traits in the earlier chapter Defining the allocation API. The chapter explained how the object header is the responsibility of the interpreter to implement.

Now that we need to implement type identification, we need the object header.

The allocator API requires that the type identifier implement the

AllocTypeId trait. We'll use an enum to identify for all our runtime types:

#[repr(u16)]

#[derive(Debug, Copy, Clone, PartialEq)]

pub enum TypeList {

ArrayBackingBytes,

ArrayOpcode,

ArrayU8,

ArrayU16,

ArrayU32,

ByteCode,

CallFrameList,

Dict,

Function,

InstructionStream,

List,

NumberObject,

Pair,

Partial,

Symbol,

Text,

Thread,

Upvalue,

}

// Mark this as a Stickyimmix type-identifier type

impl AllocTypeId for TypeList {}

Given that the allocator API requires every object that can be allocated to

have an associated type id const, this enum represents every type that

can be allocated and that we will go on to describe in this book.

It is a member of the ObjectHeader struct along with a few other members

that our Immix implementation requires:

pub struct ObjectHeader {

mark: Mark,

size_class: SizeClass,

type_id: TypeList,

size_bytes: u32,

}

The rest of the header members will be the topic of the later garbage collection part of the book.

A safe pointer abstraction

A type that can represent one of multiple types at runtime is obviously the

enum. We can wrap possible ScopedPtr<T> types like so:

#[derive(Copy, Clone)]

pub enum Value<'guard> {

ArrayU8(ScopedPtr<'guard, ArrayU8>),

ArrayU16(ScopedPtr<'guard, ArrayU16>),

ArrayU32(ScopedPtr<'guard, ArrayU32>),

Dict(ScopedPtr<'guard, Dict>),

Function(ScopedPtr<'guard, Function>),

List(ScopedPtr<'guard, List>),

Nil,

Number(isize),

NumberObject(ScopedPtr<'guard, NumberObject>),

Pair(ScopedPtr<'guard, Pair>),

Partial(ScopedPtr<'guard, Partial>),

Symbol(ScopedPtr<'guard, Symbol>),

Text(ScopedPtr<'guard, Text>),

Upvalue(ScopedPtr<'guard, Upvalue>),

}

Note that this definition does not include all the same types that were

listed above in TypeList. Only the types that can be passed dynamically at

runtime need to be represented here. The types not included here are always

referenced directly by ScopedPtr<T> and are therefore known types at

compile and run time.

You probably also noticed that Value is the fat pointer we discussed

earlier. It is composed of a set of ScopedPtr<T>s, each of which should

only require a single word, and an enum discriminant integer, which will