Peter Liniker

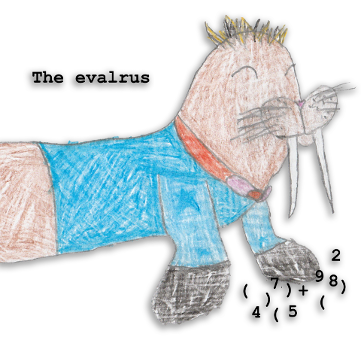

[ about ] - [ blog ]“Evalrus?”

queried my ten year old daughter. I could see she was having difficulty with lexical analysis of my project’s name. After a brief but lengthy explanation it was obvious that we should have a mascot for this project. She and I collaborated to come up with a suitable animal. Here is the result:

From now on, the RPL prompt will say evalrus:001>.

Tokenizing

For lexical analysis I decided not to take the easy regex way out. I want to

learn how to do this myself so I’m going to tokenize the input String with

my own state machine. Here are the building blocks I’ve resulted in after

a couple attempts.

The tokenize() function signature takes a String of source code and returns

Result<Vec<Token>, ParseError> where Token is:

struct Token {

pos: SourcePos,

token: TokenType,

}

// line number and character number

type SourcePos = (u32, u32);

// very simple for now, not even numbers

enum TokenType {

OpenBracket,

CloseBracket,

Symbol(String),

}SourcePos represents the line number and character position in the line of the

token and I expect we’ll be propagating these numbers throughout to helpfully

report errors.

The inner state machine loop looks like the below code section. I’ve

very sensibly decided that tab characters are not going to be valid indentation

so this is an opportunity to use the ParseError type.

loop {

match current {

Some(TAB) =>

return Err(ParseError::new(

(lineno, charno),

String::from("tabs are not valid whitespace"))),

Some(SPACE) => current = chars.next(),

Some(OPEN_BRACKET) => {

tokens.push(Token::new((lineno, charno), OpenBracket));

current = chars.next();

}

Some(CLOSE_BRACKET) => {

tokens.push(Token::new((lineno, charno), CloseBracket));

current = chars.next();

}

// EOL

None => break,

}

charno += 1;

}The above code handles single character tokens (brackets, whitespace) and the single invalid tab-character case.

Multi-character tokens must be consumed one character at a time until a terminating character is reached. Terminating characters are any that cannot be part of a symbol (in this case, brackets and whitespace.)

For this I have a quick and simple closure that returns true if the given character is in a list of characters that indicate the end of the symbol:

let terminating = [OPEN_BRACKET, CLOSE_BRACKET, SPACE, TAB];

let is_terminating = |c: char| terminating.iter().any(|t| c == *t);I’m not so great at recognizing the optimal Rustacious Iterator use patterns

so perhaps there’s a more concise way of expressing that? In Python I’d just say

if c in terminating:....

I’ve pulled out the match branch for symbols to highlight it separately below:

Some(non_terminating) => {

let symbol_begin = charno;

let mut symbol = String::from("");

symbol.push(non_terminating);

// consume symbol

loop {

current = chars.next();

if let Some(c) = current {

if is_terminating(c) {

break;

} else {

symbol.push(c);

charno += 1;

}

} else {

break;

}

}

// complete symbol

tokens.push(Token::new((lineno, symbol_begin), Symbol(symbol)));

}I’m not totally happy that that code is as pretty as it could be, the loop and break conditions could surely be refactored. Suggestions welcome!

The final state of the code at this point is tagged here.

Up next…

This is the first code update for which there are #[test]s and I expect

to continue writing tests from now on.

The next step is parsing the tokens into a syntax tree. Since this is a Lisp, it’s going to simply consist of nested cons cells and symbols for now. In future stages we’ll add more types such as strings and numbers, adding syntax for them too.

Ack

Thanks to Bob Nystrom’s excellent new series on interpreters, which I scanned (haha) to help find a better code structure for the lexing state machine than my first attempt.